How Western University's in-vehicle camera monitors state of drivers

The technology behind self-driving cars is rapidly evolving, and "the more control the automated system has, the more freedom the driver is given," explains professor Soodeh Nikan from Western University's Faculty of Electrical and Computer Engineering.

The school is at the forefront of advancing technology that could revolutionize the way we drive. With a focus on safety, particularly in workplace settings, researchers are building on Level 3 autonomous vehicles that could allow drivers to temporarily shift their attention from the road under certain conditions.

Eyes on the driver

All drivers know the importance of keeping their eyes on the road. However, in the emerging realm of self-driving cars, the focus is shifting towards the car keeping its 'eyes' on the driver. The advancements could ultimately lead to improved safety, especially in workplace settings such as public transportation, long-haul trucking, and other occupations that involve regular driving.

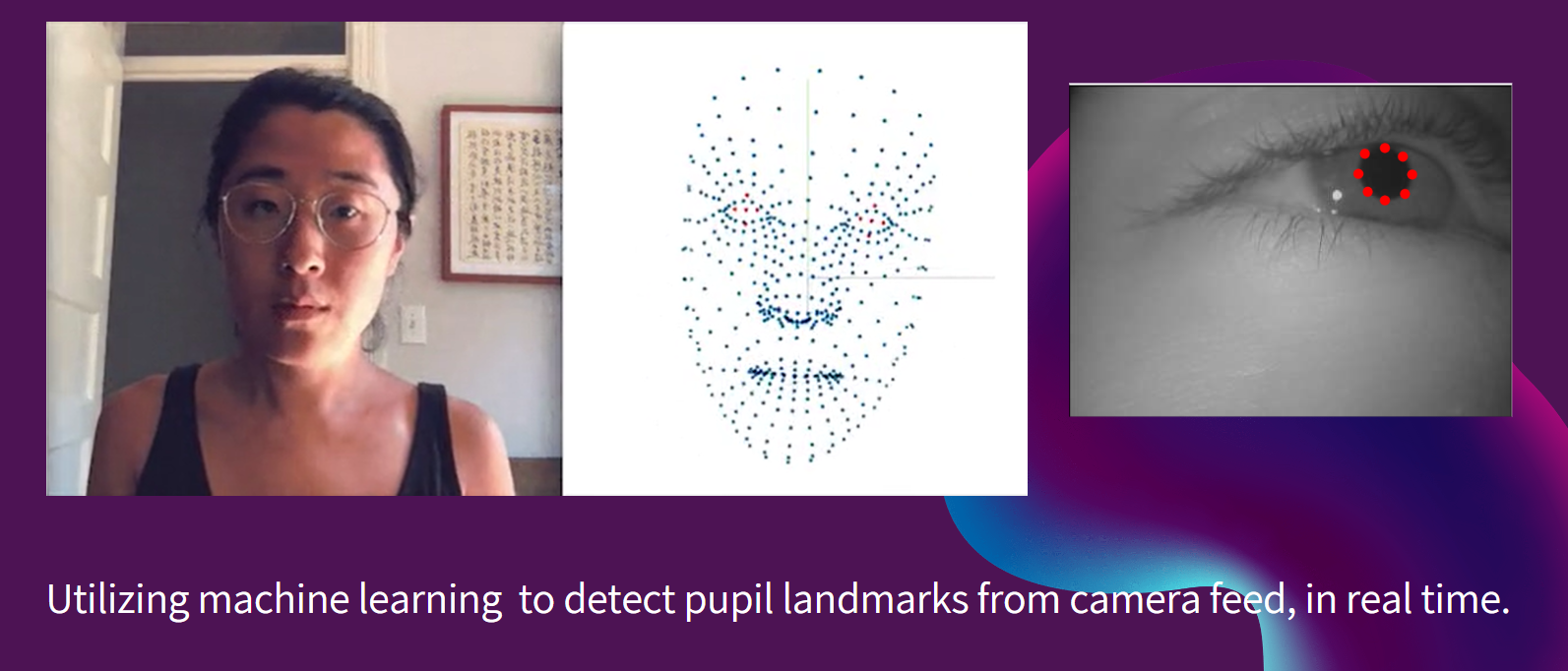

Unlike current Level 2 self-driving cars, which only monitor a driver's visual attention, the technology developed at Western University goes further, using a simple in-vehicle camera to measure pupil size, facial changes, and changes in skin colour. These indicators help identify situations where drivers might be stressed, distracted, impaired, or experiencing other conditions that prevent them from safely resuming control.

When asked about the potential implications for health and safety professionals managing vehicle fleets, Nikan says the cameras provide real-time insights into drivers' conditions, enabling managers to take immediate action. "This technology can mitigate potential legal or financial liabilities by alerting managers to any concerning behaviour or conditions exhibited by drivers, ensuring the safety of workers on the road," claims Nikan.

Partners in advancing technology

Under the guidance of Nikan, the school has tapped into the expertise of Harshita Mangotra, an undergraduate student from Indira Gandhi Delhi Technical University for Women in India.

Mangotra's role has been instrumental in creating the in-vehicle camera. Her expertise in computer vision and AI algorithms enabled her to accurately measure changes in pupil size in real time, a crucial indicator of a driver's cognitive load. Through extensive analysis of more than 20 million video images, Mangotra identified the most effective machine learning model for precise pupil size estimation.

The collaboration was made possible through the Mitacs Globalink Research Internship. "The real difference between Canada and India was the teamwork I discovered in the research lab," says Mangotra. "It helped me to complete this project in such a short time, and I couldn't have done it without the support of my peers."

"By monitoring drivers' cognitive load, emotional state, and overall well-being, we can ensure that drivers are in a position to make critical decisions when needed," explains Nikan. She says that while Level 3 cars are making their way into markets in places like California and Japan, these vehicles will still require human intervention in certain scenarios.

The potential impact of this technology is not far off. "In the US, there are plans to have technology preventing impaired drivers from operating vehicles by 2026," says Nikan. And Europe is set to make driver monitoring systems a safety standard for new car models by 2024.